BinaryConnect: Training Deep Neural Networks with binary weights during propagations

https://arxiv.org/abs/1511.00363

https://github.com/MatthieuCourbariaux/BinaryConnect

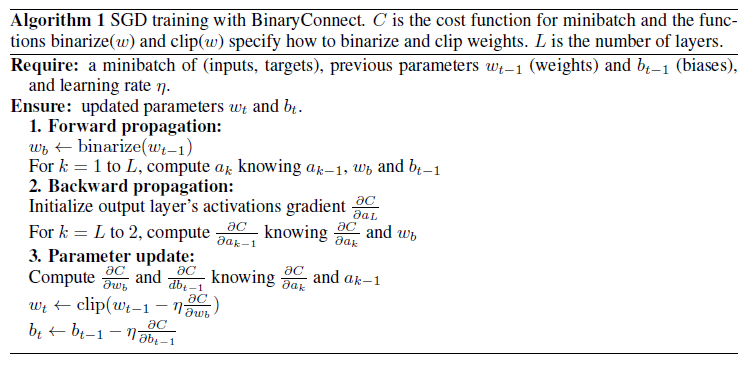

Deterministic vs stochastic binarization

Deterministic binarization

$$\omega_b = \begin{cases} +1 & \text{if } \omega \ge 0 \\ -1 & \text{otherwise} \end{cases}$$
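A minimal Python sketch of the deterministic rule, applied element-wise to a list of real-valued weights. The function name `binarize_deterministic` is illustrative and not taken from the BinaryConnect repository.

```python
def binarize_deterministic(weights):
    """Map each real-valued weight to +1 if it is >= 0, else to -1."""
    return [1.0 if w >= 0 else -1.0 for w in weights]


print(binarize_deterministic([0.3, -0.7, 0.0, -0.01]))  # prints [1.0, -1.0, 1.0, -1.0]
```

Note that $\omega = 0$ maps to $+1$, unlike a plain sign function (which returns 0 there), so every binarized weight is exactly $\pm 1$.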

Stochastic binarization

$$\omega_b = \begin{cases} +1 & \text{with probability } p = \sigma(\omega) \\ -1 & \text{with probability } 1-p \end{cases}$$

where $\sigma$ is the "hard sigmoid" function:

$$\sigma(x) = \operatorname{clip}\left(\frac{x+1}{2}, 0, 1\right) = \max\left(0, \min\left(1, \frac{x+1}{2}\right)\right)$$
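The hard sigmoid and the stochastic rule can be sketched the same way. This assumes Python's `random` module as the source of uniform samples; `hard_sigmoid` and `binarize_stochastic` are illustrative names, not the repository's code.

```python
import random


def hard_sigmoid(x):
    """sigma(x) = clip((x + 1) / 2, 0, 1) = max(0, min(1, (x + 1) / 2))."""
    return max(0.0, min(1.0, (x + 1.0) / 2.0))


def binarize_stochastic(weights, rng=random):
    """Return +1 with probability hard_sigmoid(w), else -1, for each weight."""
    # rng.random() is uniform on [0, 1), so P(rng.random() < p) = p.
    return [1.0 if rng.random() < hard_sigmoid(w) else -1.0 for w in weights]


# Empirical check: a weight of 0.5 should become +1 about 75% of the time.
rng = random.Random(0)
samples = binarize_stochastic([0.5] * 10000, rng)
print(samples.count(1.0) / len(samples))  # should be close to hard_sigmoid(0.5) = 0.75
```

Since $\sigma(\omega) \in \{0, 1\}$ whenever $|\omega| \ge 1$, such weights are binarized deterministically; only weights strictly inside $(-1, 1)$ are sampled at random.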

Tricks:

- Batch normalization: reduces the overall impact of the scale of the weights

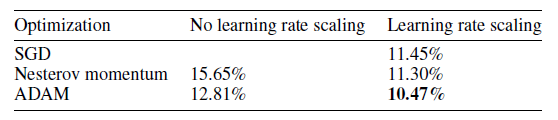

- ADAM

- SGD, Nesterov momentum

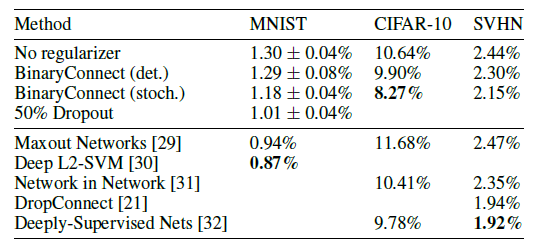
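The idea in the title, binary weights during propagations, can be sketched as a single training step: the forward and backward passes use the binarized weights $\omega_b$, the gradient with respect to $\omega_b$ updates a real-valued copy $\omega$, and the paper also clips $\omega$ to $[-1, 1]$ after each update. This sketch assumes a hypothetical one-layer linear model with squared loss and uses a common reformulation of Nesterov momentum that evaluates the gradient at the current weights; the learning rate, momentum and synthetic data are illustrative choices, and batch normalization and ADAM are left out.

```python
import random


def binarize(weights):
    # Deterministic binarization: +1 if w >= 0, else -1.
    return [1.0 if w >= 0 else -1.0 for w in weights]


def clip(x, lo=-1.0, hi=1.0):
    return max(lo, min(hi, x))


def train_step(w_real, velocity, x, y, lr=0.01, mu=0.9):
    """One BinaryConnect-style step for a hypothetical model y_hat = w_b . x.

    Propagations use the binarized weights w_b; the gradient dC/dw_b is applied
    to the real-valued weights with Nesterov momentum, then clipped to [-1, 1].
    """
    w_b = binarize(w_real)
    y_hat = sum(wb * xi for wb, xi in zip(w_b, x))  # forward pass with binary weights
    err = y_hat - y  # dC/dy_hat for C = 0.5 * (y_hat - y) ** 2
    grads = [err * xi for xi in x]  # dC/dw_b
    new_w, new_v = [], []
    for w, v, g in zip(w_real, velocity, grads):
        v = mu * v - lr * g  # velocity update
        new_v.append(v)
        new_w.append(clip(w + mu * v - lr * g))  # Nesterov step on the real weights
    return new_w, new_v


# Hypothetical usage: recover binary weights that generated noise-free data.
target = [1.0, -1.0, 1.0]
rng = random.Random(0)
w_real = [rng.uniform(-0.1, 0.1) for _ in target]
velocity = [0.0] * len(target)
for _ in range(2000):
    x = [rng.uniform(-1.0, 1.0) for _ in target]
    y = sum(t * xi for t, xi in zip(target, x))
    w_real, velocity = train_step(w_real, velocity, x, y)

print(binarize(w_real))  # expected to match target once training has converged
```

Keeping the real-valued weights is what lets small gradient steps accumulate: a single update is usually too small to flip a binarized weight, but repeated updates in the same direction eventually move $\omega$ across zero.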

Results