Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding

https://arxiv.org/abs/1510.00149

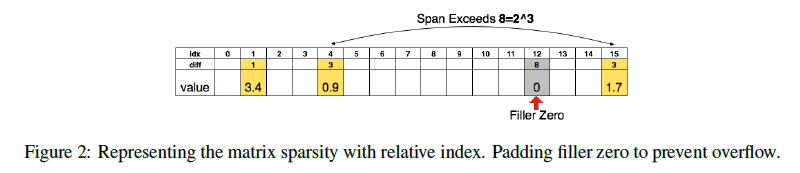

Step 1: Pruning

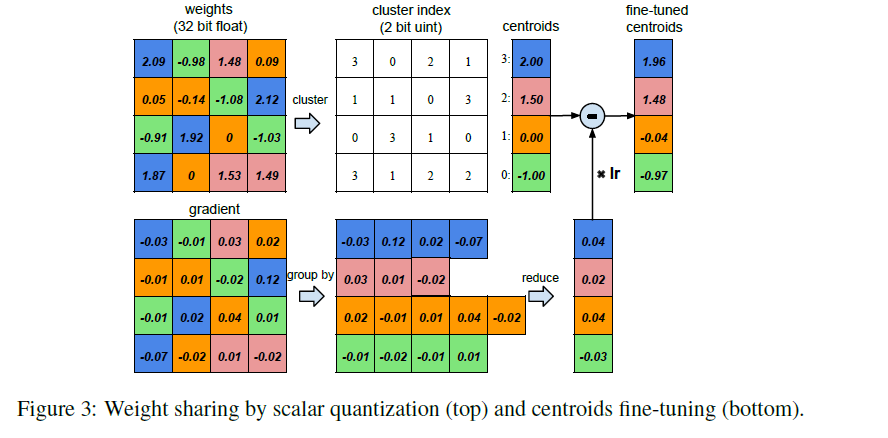
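The pruning step appears above only as a figure. Its idea is to train the network, remove every connection whose weight falls below a threshold, and then retrain the remaining sparse connections. Below is a minimal sketch of that loop, assuming plain magnitude pruning with a hand-picked threshold. The helper names `magnitude_prune` and `masked_update`, the threshold value, and the toy weights are hypothetical and only for illustration. It is not the paper's implementation.

```python
import numpy as np


def magnitude_prune(weights: np.ndarray, threshold: float):
    """Remove connections whose |weight| is below `threshold` (hypothetical helper).

    Returns the pruned weight array and a boolean mask of surviving connections.
    """
    mask = np.abs(weights) >= threshold
    # np.where avoids the -0.0 entries that weights * mask would produce.
    return np.where(mask, weights, 0.0), mask


def masked_update(weights: np.ndarray, grad: np.ndarray, mask: np.ndarray, lr: float = 0.01):
    """One plain SGD step during retraining; pruned connections stay at zero."""
    return np.where(mask, weights - lr * grad, 0.0)


w = np.array([[0.5, -0.02, 0.1],
              [-0.3, 0.01, -0.07]])
pruned, mask = magnitude_prune(w, threshold=0.05)  # threshold chosen only for illustration
print(pruned)
print(f"{mask.sum()} of {mask.size} weights kept")
```

Keeping the mask around is what lets retraining update only the surviving weights. Reapplying it after every step stops pruned connections from growing back.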

Step 2: Quantization

Centroid initialization (for k-means weight sharing):

Forgy (random) initialization randomly chooses k observations (weights) from the data set and uses them as the initial centroids.

Density-based initialization spaces k points evenly along the y-axis of the weights' CDF, finds where each one horizontally intersects the CDF, and then drops vertically to the x-axis. Each resulting weight value becomes a centroid, so centroids concentrate where the weights are dense.

Linear initialization spaces the centroids evenly between the [min, max] of the original weights.
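The three initializations can be compared with a short sketch. It assumes a single layer's weights flattened into a 1-D array, refines the centroids with ordinary 1-D k-means (Lloyd's algorithm), and then replaces each weight by its shared centroid. All function names are hypothetical. The exact CDF levels used for density-based initialization (midpoints `(i + 0.5) / k`) are also an assumption, since the description above does not pin them down.

```python
import numpy as np


def forgy_init(weights: np.ndarray, k: int, rng: np.random.Generator) -> np.ndarray:
    # Forgy: pick k distinct observations (weights) at random as centroids.
    return rng.choice(weights, size=k, replace=False)


def density_init(weights: np.ndarray, k: int) -> np.ndarray:
    # Density-based: evenly spaced levels on the CDF's y-axis, mapped back to
    # weight values via the empirical quantile (assumed midpoint levels).
    levels = (np.arange(k) + 0.5) / k
    return np.quantile(weights, levels)


def linear_init(weights: np.ndarray, k: int) -> np.ndarray:
    # Linear: evenly spaced centroids between the min and max weight.
    return np.linspace(weights.min(), weights.max(), k)


def kmeans_1d(weights: np.ndarray, centroids: np.ndarray, iters: int = 20):
    """Plain 1-D k-means; returns final centroids and each weight's cluster index."""
    c = centroids.astype(float).copy()
    for _ in range(iters):
        # Assign each weight to its nearest centroid.
        idx = np.argmin(np.abs(weights[:, None] - c[None, :]), axis=1)
        # Move each centroid to the mean of its members (leave empty clusters as-is).
        for j in range(len(c)):
            members = weights[idx == j]
            if members.size:
                c[j] = members.mean()
    idx = np.argmin(np.abs(weights[:, None] - c[None, :]), axis=1)
    return c, idx


rng = np.random.default_rng(0)
w = rng.normal(0.0, 0.1, size=1000)  # synthetic stand-in for one layer's weights
k = 16                               # 16 shared values -> 4-bit indices
for name, init in [("forgy", forgy_init(w, k, rng)),
                   ("density", density_init(w, k)),
                   ("linear", linear_init(w, k))]:
    c, idx = kmeans_1d(w, init)
    shared = c[idx]                  # every weight replaced by its shared centroid
    print(f"{name:8s} mse={np.mean((w - shared) ** 2):.2e}")
```

After clustering, each weight only needs to be stored as a log2(k)-bit index into the centroid table. The mean squared error printed here is just one rough way to compare the starting points. Linear initialization also places centroids out at the large-magnitude tails, where Forgy and density-based initialization put few, and the paper argues those few large weights matter most.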