INCREMENTAL NETWORK QUANTIZATION: TOWARDS LOSSLESS CNNS WITH LOW-PRECISION WEIGHTS

https://arxiv.org/abs/1702.03044

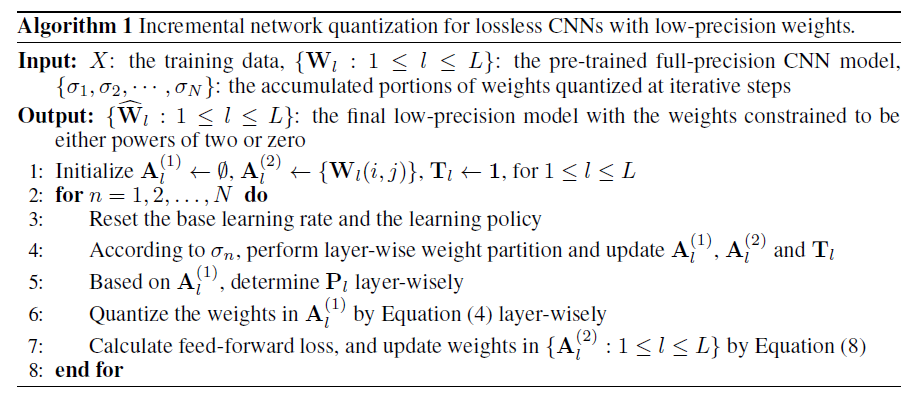

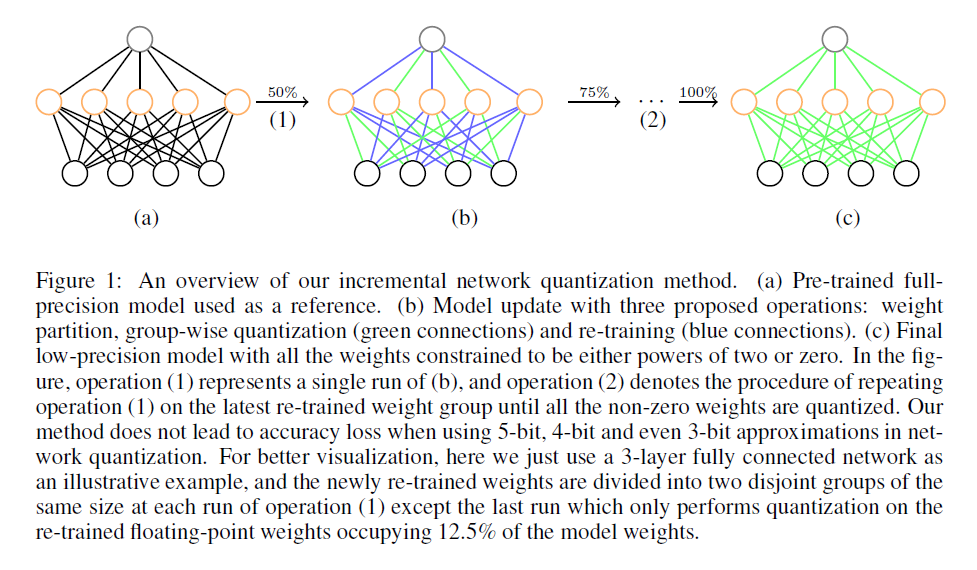

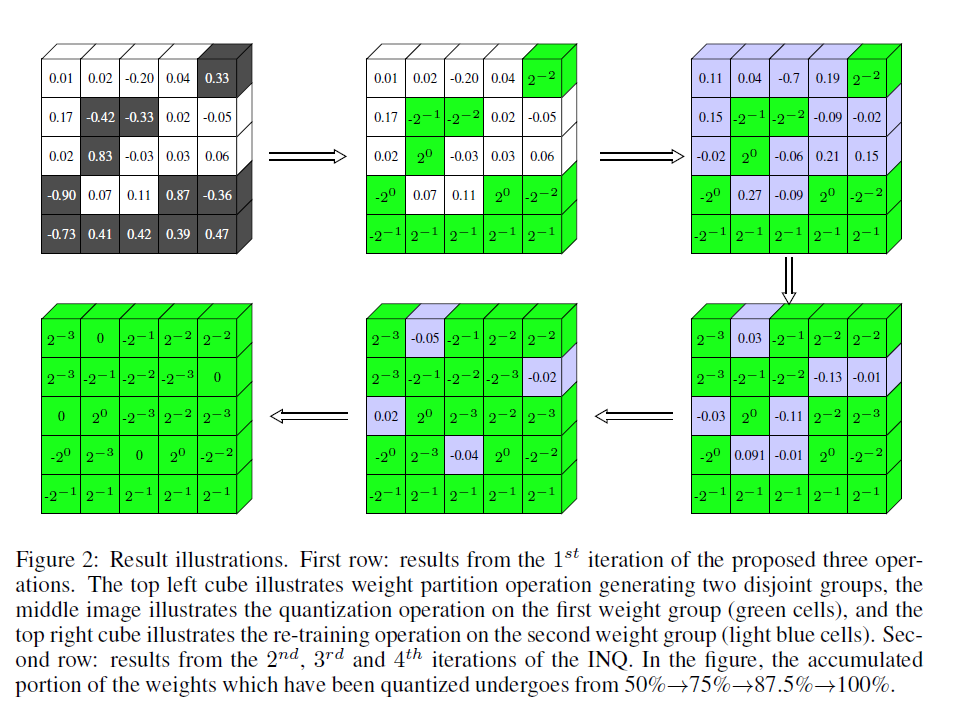

Incremental network quantization (INQ) consists of three interdependent operations:

Weight partition:

Divide the weights in each layer of a pre-trained full-precision CNN model into two disjoint groups.
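A minimal sketch of the partition step, assuming a magnitude-based rule in which the largest-magnitude weights go into the first (to-be-quantized) group and the rest into the second (to-be-re-trained) group. The function name `partition_by_magnitude`, the `fraction` parameter, and the flat list standing in for one layer's weights are all hypothetical.

```python
def partition_by_magnitude(weights, fraction):
    """Split the indices of `weights` into two disjoint groups.

    Hypothetical rule: the `fraction` of weights with the largest absolute
    values form the first group (quantized); the rest form the second
    group (kept in full precision and re-trained).
    """
    if not 0.0 <= fraction <= 1.0:
        raise ValueError("fraction must be in [0, 1]")
    # Indices ordered from largest to smallest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    k = round(fraction * len(weights))
    first = sorted(order[:k])
    second = sorted(order[k:])
    return first, second


if __name__ == "__main__":
    w = [0.9, -0.05, 0.3, -0.7, 0.01, 0.2]
    quantize_group, retrain_group = partition_by_magnitude(w, 0.5)
    print(quantize_group)  # [0, 2, 3]
    print(retrain_group)   # [1, 4, 5]
```

Sorting by magnitude is only one possible partition rule; a random split would fit the same interface.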

Group-wise quantization:

The weights in the first group are responsible for forming a low-precision base for the original model, so they are quantized using Equation (4) of the paper.

The weights in the second group stay in full precision and are the ones to be re-trained, so that they can compensate for the accuracy lost by quantizing the first group.
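The quantization of the first group can be sketched as rounding each of its weights to the nearest value in the set {0} together with ±2^n for n from n_min to n_max. This is a simplified stand-in and not necessarily identical to the paper's Equation (4): the helper names, the `num_exponents` parameter, and the choice n_max = floor(log2(4s/3)) with s the largest weight magnitude in the layer are assumptions.

```python
import math


def exponent_range(weights, num_exponents):
    """Pick the exponents n_min..n_max of the allowed powers of two.

    Assumption: n_max = floor(log2(4s/3)) with s = max |w|, i.e. the
    largest power of two not exceeding 4s/3.
    """
    s = max(abs(w) for w in weights)
    if s == 0.0:
        raise ValueError("all weights are zero")
    n_max = math.floor(math.log2(4.0 * s / 3.0))
    return n_max - num_exponents + 1, n_max


def power_of_two_levels(n_min, n_max):
    """Candidate values: 0 and +/-2**n for n_min <= n <= n_max."""
    levels = [0.0]
    for n in range(n_min, n_max + 1):
        levels += [2.0 ** n, -(2.0 ** n)]
    return levels


def quantize_group(weights, group, levels):
    """Copy of `weights` with the indices in `group` rounded to the nearest level."""
    out = list(weights)
    for i in group:
        out[i] = min(levels, key=lambda q: abs(weights[i] - q))
    return out


if __name__ == "__main__":
    w = [0.9, -0.05, 0.3, -0.7, 0.01, 0.2]
    levels = power_of_two_levels(*exponent_range(w, num_exponents=4))
    print(quantize_group(w, [0, 2, 3], levels))
    # [1.0, -0.05, 0.25, -0.5, 0.01, 0.2]
```

Only the first-group indices (0, 2, 3) change; the second-group weights keep their full-precision values for re-training.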

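Re-training:

The full-precision weights of the second group are updated by re-training, while the already quantized weights of the first group are kept fixed.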
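Keeping the quantized weights fixed during re-training amounts to masking their updates. The sketch below shows one masked SGD step, assuming plain SGD and a hypothetical toy loss 0.5 * (w·x - y)^2 standing in for the network's training loss; `loss_and_grad`, `masked_sgd_step`, and the example data are illustrative only.

```python
def loss_and_grad(weights, x, y):
    """Toy stand-in for the network loss: 0.5 * (w . x - y)**2 and its gradient."""
    residual = sum(w * xi for w, xi in zip(weights, x)) - y
    return 0.5 * residual ** 2, [residual * xi for xi in x]


def masked_sgd_step(weights, grads, frozen, lr):
    """SGD step applied only to weights whose index is not in `frozen`."""
    return [w if i in frozen else w - lr * g
            for i, (w, g) in enumerate(zip(weights, grads))]


if __name__ == "__main__":
    original = [0.9, -0.05, 0.3, -0.7, 0.01, 0.2]
    x = [1.0] * len(original)
    y = sum(original)  # toy target that the pre-trained weights fit exactly
    w = [1.0, -0.05, 0.25, -0.5, 0.01, 0.2]  # indices 0, 2, 3 already quantized
    frozen = {0, 2, 3}
    before, _ = loss_and_grad(w, x, y)
    for _ in range(20):
        _, grad = loss_and_grad(w, x, y)
        w = masked_sgd_step(w, grad, frozen, lr=0.1)
    after, _ = loss_and_grad(w, x, y)
    print(before, after)  # the loss shrinks; w[0], w[2], w[3] are unchanged
```

The free weights drift away from their pre-trained values precisely so that the error introduced by quantizing the frozen ones is absorbed.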

Once the first round of quantization and re-training is finished, all three operations are applied again to the second weight group (which is itself partitioned, partly quantized, and partly re-trained). This is repeated iteratively until every weight has been converted to either a power of two or zero, so the method acts as an incremental procedure that alternates network quantization with accuracy enhancement.
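Putting the three operations together, the iterative procedure can be sketched as below. Everything here is an assumption for illustration: the accumulated quantized portions (50%, 75%, 87.5%, 100%), the magnitude-based partition of the still-float weights, the nearest-power-of-two rounding, the toy loss 0.5 * (w·x - y)^2, and all names and hyperparameters (`inq`, `num_exponents`, `lr`, `steps`).

```python
import math


def levels_for(weights, num_exponents):
    """Assumed level set: 0 and +/-2**n, with n_max = floor(log2(4s/3)), s = max |w|."""
    s = max(abs(w) for w in weights)
    n_max = math.floor(math.log2(4.0 * s / 3.0))
    levels = [0.0]
    for n in range(n_max - num_exponents + 1, n_max + 1):
        levels += [2.0 ** n, -(2.0 ** n)]
    return levels


def loss_and_grad(weights, x, y):
    """Toy objective 0.5 * (w . x - y)**2 standing in for the network loss."""
    r = sum(w * xi for w, xi in zip(weights, x)) - y
    return 0.5 * r * r, [r * xi for xi in x]


def inq(weights, x, y, schedule=(0.5, 0.75, 0.875, 1.0),
        num_exponents=4, lr=0.05, steps=50):
    w = list(weights)
    levels = levels_for(w, num_exponents)  # fixed once from the pre-trained weights
    frozen = set()
    for portion in schedule:
        # 1. Partition: among the still-float weights, take the largest magnitudes
        #    until `portion` of all weights belong to the quantized group.
        target = round(portion * len(w))
        free = sorted((i for i in range(len(w)) if i not in frozen),
                      key=lambda i: abs(w[i]), reverse=True)
        for i in free[:max(0, target - len(frozen))]:
            # 2. Group-wise quantization to the nearest power of two or zero.
            w[i] = min(levels, key=lambda q: abs(w[i] - q))
            frozen.add(i)
        # 3. Re-training: update only the weights that are still full precision.
        for _ in range(steps):
            _, grad = loss_and_grad(w, x, y)
            w = [wi if i in frozen else wi - lr * g
                 for i, (wi, g) in enumerate(zip(w, grad))]
    return w


if __name__ == "__main__":
    pretrained = [0.9, -0.05, 0.3, -0.7, 0.01, 0.2, -0.4, 0.15]
    x = [1.0, 0.5, -1.0, 0.8, 2.0, -0.3, 0.6, 1.5]
    y = sum(w * xi for w, xi in zip(pretrained, x))
    print(inq(pretrained, x, y))  # every entry is 0 or +/-2**n
```

Because a weight is never modified after it is quantized, the final model contains only values from the level set, while each re-training round lets the remaining float weights absorb part of the quantization error.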